Hello there! Welcome to my personal portfolio!

I am Iftekhar Tanveer—a computer scientist interested in natural language processing and machine learning. I work as a Research Scientist at Spotify Research.

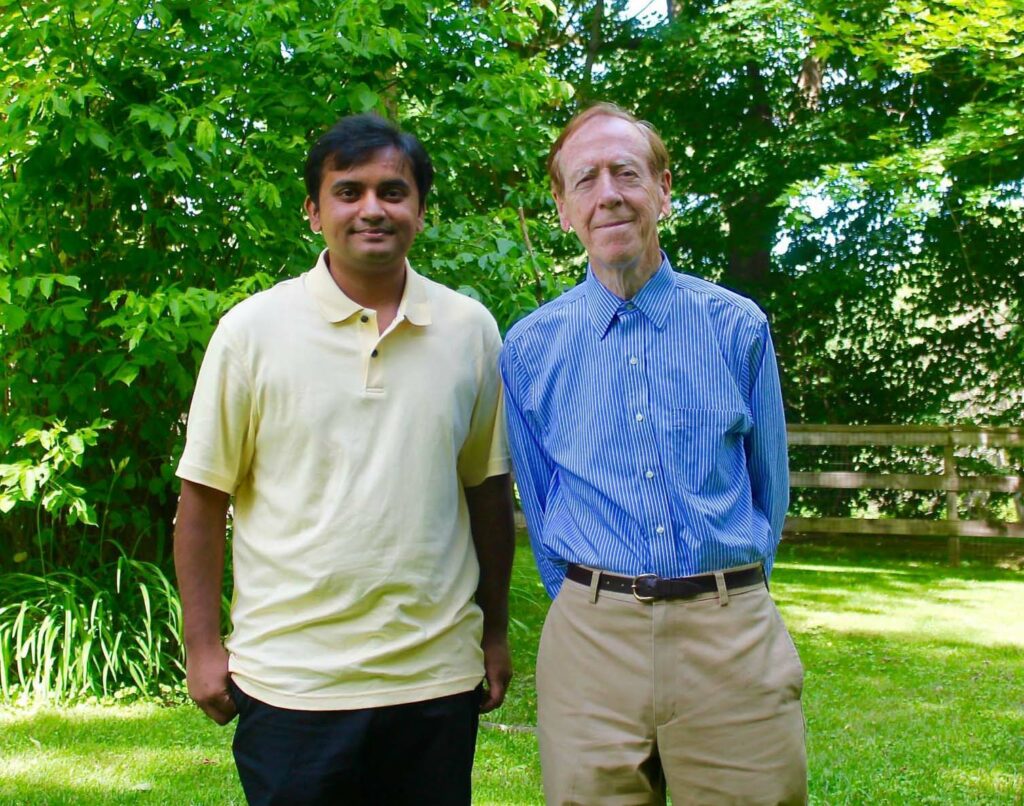

I did my Ph.D. at the University of Rochester, where my academic advisor was Professor M. Ehsan Hoque. I am also humbled to have had the opportunity to work with Professor Henry Kautz, Professor Daniel Gildea, Professor Ji Liu, and Professor Gonzalo Mateos. I am originally from Bangladesh.

Highlighted Publications

Lightweight and Efficient Spoken Language Identification of Long-form Audio, Interspeech’23, Dublin, Ireland

Unsupervised Speaker Diarization that is Agnostic to Language, Overlap-Aware, and Tuning Free, Interspeech’22, Incheon, Korea

FairyTED: A Fair Rating Predictor for TED Talk Data, AAAI’20, NY, USA

UR-FUNNY: A Multimodal Language Dataset for Understanding Humor, EMNLP’19, Hong Kong, China

A Causality-Guided Prediction of the TED Talk Ratings from the Speech-Transcripts using Neural Networks, arXiv preprint arXiv:1905.08392

SyntaViz: Visualizing Voice Queries through a Syntax-Driven Hierarchical Ontology, EMNLP’18, Brussels, Belgium, 2018

Awe the Audience: How the Narrative Trajectories Affect Audience Perception in Public Speaking, CHI’18, Montreal, Canada, 2018

AutoManner: An Automated Interface for Making Public Speakers Aware of Their Mannerisms, IUI’16, Sonoma, CA, USA, 2016

Unsupervised Extraction of Human-Interpretable Nonverbal Behavioral Cues in a Public Speaking Scenario, ACMMM’15, Brisbane, Australia, 2015

Automated Prediction of Job Interview Performance: The Role of What You Say and How You Say It, FG’15, Ljubljana, Slovenia, 2015

News and Presentations

| Date | News | Supplementary Materials |
|---|---|---|
| Aug 21st, 2023 | My second paper at Spotify was published at Interspeech 2023. It is on Spoken Language Identification (SLI) for podcasts. SLI is the task of identifying which language is being spoken from audio alone. Our method applies to very long audio (e.g., podcasts) and allows multiple languages to be spoken in the same audio. | Paper, Spotify blog |
| June 15th, 2022 | My first paper after joining Spotify has been accepted for presentation at Interspeech 2022. It is a paper on speaker diarization, which is the problem of identifying “who spoke where” in long audio. In this paper, we propose a speaker diarization technique that is agnostic to language, overlap-aware, and tuning free. | Paper, Spotify blog |
| Aug 3rd, 2020 | I joined Spotify as a Research Scientist. | |
| Nov 10th, 2019 | Our FairyTED paper was accepted at AAAI 2020!!! After reading Judea Pearl’s “The Book of Why”, I realized that neural networks alone cannot make bias-free and fair predictions. It is important to model the data-generating process (possibly using causal models). I tried to convince Rupam, Ankani, and Soumen of the importance of the problem and, together, we pulled the paper off. I’m really very proud of this contribution. | Paper |
| Aug 13th, 2019 | Our UR-FUNNY paper was accepted at EMNLP 2019. | Paper |
| Oct 27th, 2018 | I gave a presentation in the lab on Judea Pearl’s new book “The Book of Why”. My advisor, Ehsan Hoque, tweeted about it, and Judea Pearl himself commented on the tweet! | Tweet, Slides |
| Oct 22nd, 2018 | I successfully defended my Ph.D. thesis. | Pictures, Slides |
| Aug 6th, 2018 | One of my projects from my internship at Comcast Lab was accepted at EMNLP 2018. | Paper |
| April 25, 2018 | I presented our TED Talk work at CHI 2018. | Slides |
| Feb 16, 2018 | I presented Chapter 5 of the Deep Learning book in the lab. | Slides |
| Jan 2018 | Our paper was accepted at CHI 2018. | |